AI-Powered Efficiency for QA Teams

As Koombea scaled, so did its QA teams and, with them, the challenge of maintaining high-quality deliverables across many projects. Existing tracking tools couldn’t provide the structure, visibility, and efficiency that a growing pipeline needed.

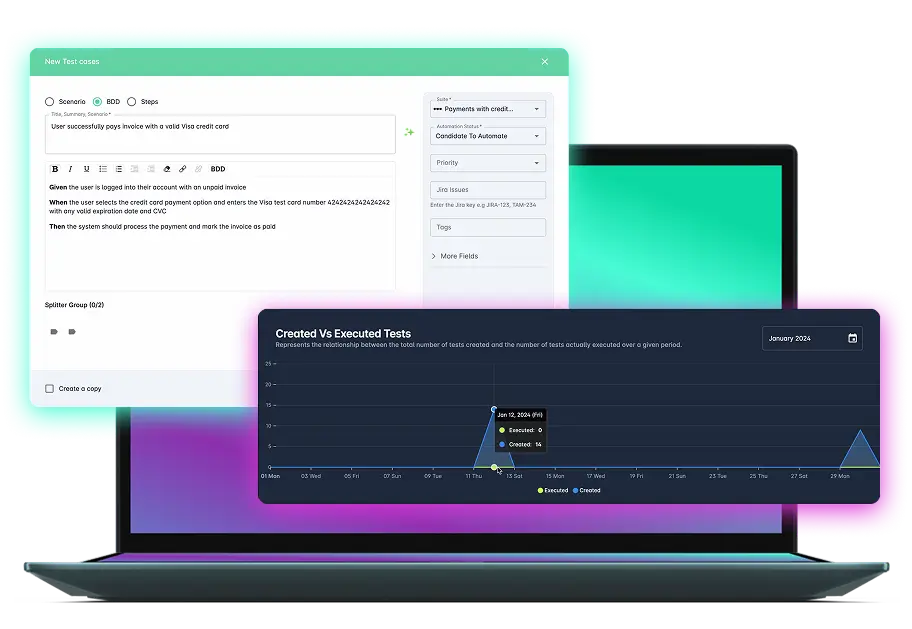

Tambora was born out of this need. It is a platform for organizing, tracking, and analyzing test cases that also helps QA engineers automate one of the most time-consuming phases of testing: test design.

But the breakthrough came with the integration of AI-powered test case generation, transforming Tambora from a useful tracker into a productivity engine.

QA Process → Manual Design → Tambora Prototype → AI Assistant Integration → Team Visibility → Scalable QA Tool

The Challenge: Evolving an App with Untapped Potential

Koombea’s internal product team led the design, development, and testing of Tambora, with a strong focus on delivering an intuitive user experience for both QA engineers and managers. Since its launch, Tambora’s use has expanded across the entire operations team.

This work included:

- Designing the platform to organize test cases into suites and folders by modules or features.

- Adding tracking tools for manual testing and test execution.

- Integrating Jira to link test cases to specific tickets and priorities.

- Embedding a Test Case AI Assistant using OpenAI’s API to generate structured test cases from a simple prompt.

- Auto-filling test inputs (e.g., credit card test data) based on context.

- Making all AI-generated test cases editable, categorized, and instantly actionable.

- Implementing the chatbot’s logic using LangGraph and Django Channels to enable real-time, event-driven communication.

- Supporting dynamic LLM selection, allowing users to choose their preferred AI model during a session.
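
The list above describes an assistant that returns structured, categorized test cases rather than free text, with the model chosen per session. A minimal sketch of that idea follows, assuming the model is asked to reply with JSON matching a small schema. The model names in `ALLOWED_MODELS`, the JSON field names, and the helpers `build_request` and `parse_cases` are hypothetical; the request dictionary only mirrors the general shape of an OpenAI Chat Completions body, and the network call itself is left out so the example runs offline.

```python
import json
from dataclasses import dataclass
from typing import List

# Hypothetical allow-list for per-session model selection; real model names would differ.
ALLOWED_MODELS = {"model-a", "model-b"}

# The three categories the assistant is asked to cover.
CATEGORIES = {"positive", "negative", "edge"}

SYSTEM_PROMPT = (
    "You are a QA assistant. Reply only with a JSON object of the form "
    '{"test_cases": [{"title": str, "category": "positive" | "negative" | "edge", '
    '"steps": [str], "expected_result": str}]}.'
)


@dataclass
class GeneratedCase:
    title: str
    category: str
    steps: List[str]
    expected_result: str


def build_request(requirement: str, model: str) -> dict:
    """Assemble a Chat Completions-style request body; sending it is out of scope."""
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unsupported model: {model}")
    return {
        "model": model,  # chosen by the user for this session
        "response_format": {"type": "json_object"},  # ask for JSON, not prose
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": requirement},
        ],
    }


def parse_cases(raw: str) -> List[GeneratedCase]:
    """Validate the model's JSON reply and turn it into editable records."""
    payload = json.loads(raw)
    cases = []
    for item in payload["test_cases"]:
        category = item["category"].strip().lower()
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        steps = [str(step) for step in item["steps"]]
        if not steps:
            raise ValueError(f"no steps for {item['title']!r}")
        cases.append(GeneratedCase(item["title"], category, steps, item["expected_result"]))
    return cases


if __name__ == "__main__":
    reply = '{"test_cases": [{"title": "Valid login", "category": "Positive", "steps": ["Open the login page", "Submit valid credentials"], "expected_result": "Dashboard is shown"}]}'
    for case in parse_cases(reply):
        print(case.category, "-", case.title)
```

Keeping validation separate from the API call means a malformed reply is rejected before anything reaches the test repository, which fits the goal of usable, editable cases rather than raw text.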

“We didn’t just want AI that outputs text. We needed usable test cases directly inside the system. That’s what we built.”
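
The auto-filling item in the list (for example, credit card test data) can be pictured as a lookup from context keywords to stored fixtures. The sketch below assumes exactly that; the keyword table, its values, and the `autofill` helper are hypothetical. The card number is the widely published Visa test number 4111 1111 1111 1111, and `luhn_valid` applies the standard Luhn checksum so pre-filled numbers at least pass basic format validation.

```python
from typing import Dict

# Hypothetical fixture table: context keyword -> test values to pre-fill.
# 4111 1111 1111 1111 is a widely published Visa test number, not a real card.
TEST_DATA: Dict[str, Dict[str, str]] = {
    "credit card": {"card_number": "4111111111111111", "expiry": "12/30", "cvv": "123"},
    "email": {"email": "qa.user@example.com"},
}


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one and
    # subtracting 9 whenever the doubled value exceeds 9.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return bool(digits) and total % 10 == 0


def autofill(context: str) -> Dict[str, str]:
    """Collect the fixtures whose keyword appears in the requirement or step text."""
    text = context.lower()
    filled: Dict[str, str] = {}
    for keyword, values in TEST_DATA.items():
        if keyword in text:
            filled.update(values)
    return filled
```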

How It Works: From Prompt to Structured Test Case

- A user in Tambora writes a functional requirement.

- The AI Assistant suggests test cases (positive, negative, and edge).

- The user edits each case, assigns a priority, links a Jira ticket, and confirms the module.

- The case is stored, tracked, and executed within the platform.

- Managers see project coverage, contributions, and trends.
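
The flow above can be sketched as a small state model. This is a hypothetical illustration, not Tambora’s actual data model: the `Case` record, the `confirm` and `execute` helpers, the simplified Jira issue-key pattern, and the coverage definition (share of confirmed cases per module executed at least once) are all assumptions.

```python
import re
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional

# Simplified check of Jira's default issue-key shape, e.g. "QA-123" (an assumption).
JIRA_KEY = re.compile(r"[A-Z][A-Z0-9_]+-\d+")


class Status(Enum):
    SUGGESTED = "suggested"  # produced by the AI Assistant
    CONFIRMED = "confirmed"  # reviewed and accepted by a user


class Priority(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Case:
    title: str
    category: str  # "positive", "negative", or "edge"
    status: Status = Status.SUGGESTED
    priority: Optional[Priority] = None
    jira_key: Optional[str] = None
    module: Optional[str] = None
    results: List[str] = field(default_factory=list)  # execution history


def confirm(case: Case, priority: Priority, jira_key: str, module: str) -> Case:
    """Reviewer step: assign priority, link a Jira ticket, confirm the module."""
    if not JIRA_KEY.fullmatch(jira_key):
        raise ValueError(f"not a Jira issue key: {jira_key}")
    case.priority, case.jira_key, case.module = priority, jira_key, module
    case.status = Status.CONFIRMED
    return case


def execute(case: Case, outcome: str) -> None:
    """Record a run; only confirmed cases can be executed."""
    if case.status is not Status.CONFIRMED:
        raise RuntimeError("confirm the case before executing it")
    if outcome not in ("passed", "failed", "blocked"):
        raise ValueError(f"unknown outcome: {outcome}")
    case.results.append(outcome)


def coverage_by_module(cases: List[Case]) -> Dict[str, float]:
    """Share of confirmed cases per module that have been executed at least once."""
    totals: Dict[str, int] = {}
    executed: Dict[str, int] = {}
    for c in cases:
        if c.status is Status.CONFIRMED and c.module:
            totals[c.module] = totals.get(c.module, 0) + 1
            executed[c.module] = executed.get(c.module, 0) + (1 if c.results else 0)
    return {m: executed[m] / totals[m] for m in totals}
```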

Why It Matters

QA Engineers

- Save 50–75% of test design time

- Generate test cases in seconds

- Auto-fill contextual test data

- Eliminate tool-switching

Managers

- Analyze team and project performance

- Spot low activity or risks by user

- Track execution history by project
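
The manager-facing items can be approximated with simple aggregations over execution records. In this sketch, the `Execution` record, the seven-day window, and the five-run threshold for low activity are hypothetical choices, and the per-project failure rate is just one possible risk signal; the source does not describe how Tambora computes its metrics.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Iterable, List, Set


@dataclass(frozen=True)
class Execution:
    user: str
    project: str
    day: date
    outcome: str  # "passed", "failed", or "blocked"


def low_activity_users(
    runs: Iterable[Execution], team: Set[str], today: date, days: int = 7, minimum: int = 5
) -> List[str]:
    """Team members with fewer than `minimum` executions in the `days` days up to `today`."""
    since = today - timedelta(days=days)
    counts = Counter(r.user for r in runs if r.day > since)
    return sorted(u for u in team if counts[u] < minimum)


def history_by_project(runs: Iterable[Execution]) -> Dict[str, List[Execution]]:
    """Executions grouped per project, oldest first."""
    grouped: Dict[str, List[Execution]] = defaultdict(list)
    for r in runs:
        grouped[r.project].append(r)
    return {p: sorted(rs, key=lambda r: r.day) for p, rs in grouped.items()}


def failure_rate(runs: Iterable[Execution]) -> Dict[str, float]:
    """Failed share of executions per project, used here as a rough risk signal."""
    total: Counter = Counter()
    failed: Counter = Counter()
    for r in runs:
        total[r.project] += 1
        if r.outcome == "failed":
            failed[r.project] += 1
    return {p: failed[p] / total[p] for p in total}
```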

Future Roadmap: From Test Cases to QA Knowledge

Tambora is evolving into a holistic operational quality platform.

Beyond AI-powered test case design, Tambora includes powerful modules like:

- Test Run: Used to plan all necessary QA activities before production releases, ensuring thorough coverage and execution clarity.

- Documents: A centralized space for operational documentation such as VPAT 2.5, Release Plan & Protocol, and Transition Plans. Transition Plans, for example, let any Operations team member plan time off while assigning responsibilities and documenting expected deliverables and timelines for a seamless handover.
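
One way to picture the Test Run module’s role before a release is a plan of case IDs plus a readiness check. The `ReleaseRun` class and its rule (every planned case has been executed and every case marked critical has passed) are assumptions for illustration only, not a description of how Tambora decides release readiness.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ReleaseRun:
    release: str
    planned: List[str]  # IDs of the cases planned for this release
    critical: List[str] = field(default_factory=list)  # subset that must pass
    results: Dict[str, str] = field(default_factory=dict)  # case ID -> latest outcome

    def record(self, case_id: str, outcome: str) -> None:
        """Store the latest outcome for a planned case."""
        if case_id not in self.planned:
            raise KeyError(f"{case_id} is not planned for {self.release}")
        self.results[case_id] = outcome

    def not_executed(self) -> List[str]:
        """Planned cases that have no recorded outcome yet."""
        return [c for c in self.planned if c not in self.results]

    def is_release_ready(self) -> bool:
        """Every planned case executed and every critical case passed."""
        if self.not_executed():
            return False
        return all(self.results.get(c) == "passed" for c in self.critical)
```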

Next on the roadmap is the Knowledge Library Module, which will be trained on Koombea’s QA best practices. With it, engineers will be able to use Tambora as a QA strategy assistant, asking questions such as:

“How do I manage QA for multi-tenant apps?”

“What’s the best way to write a test plan for feature X?”

Tambora is no longer just a QA tool; it’s becoming a co-pilot for operational quality, empowering teams to maintain performance, documentation, and continuity across all areas of software delivery.

Building Smart Tools, Internally and Beyond

Tambora demonstrates how AI can remove operational bottlenecks without relying on off-the-shelf QA platforms. It’s a fully custom, production-ready system built by Koombea, for Koombea.

And it shows what’s possible when deep product knowledge meets AI engineering.

If your team is building AI-powered tools or needs help structuring processes, Koombea has the technical depth and execution capability to bring it to life.